Mick Grierson: Maximilian and openFrameworks

Thursday 24/11/2011

Maximilian is an open source C++ toolkit for audio DSP.

https://github.com/micknoise/Maximilian

http://maximilian.strangeloop.co.uk

Maximilian is an open source, MIT licensed C++ audio synthesis library. It’s designed to be cross platform and simple to use. The syntax and program structure are based on the popular ‘Processing’ environment. Maximilian provides standard waveforms, envelopes, sample playback, resonant filters, and delay lines. In addition, equal power stereo, quadraphonic and 8-channel ambisonic support is included. There are also granular synthesisers with timestretching, FFTs and some music information retrieval tools.

There’s a command line version, and an openFrameworks addon called ofxMaxim. It also works well with Cinder, or just about anything else.
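To give a flavour of what "equal power stereo" means, here is a minimal self-contained sketch of an equal-power pan law. It is not taken from Maximilian's source and does not use its API; the names `StereoGains` and `equalPowerPan` are made up for this illustration.

```cpp
#include <cassert>
#include <cmath>

// Left/right gain pair for one mono source.
struct StereoGains {
    double left;
    double right;
};

// Equal-power pan law: pan = 0.0 is hard left, 1.0 is hard right.
// The gains follow a quarter cosine/sine cycle, so left^2 + right^2 == 1
// at every position and the total power stays constant across the sweep.
StereoGains equalPowerPan(double pan) {
    assert(pan >= 0.0 && pan <= 1.0);      // precondition of this sketch
    const double halfPi = std::acos(0.0);  // pi / 2
    const double angle = pan * halfPi;
    return {std::cos(angle), std::sin(angle)};
}
```

Multiplying each mono sample by `left` and `right` gives the two output channels. At the centre position both gains are about 0.707 rather than 0.5, which is what avoids the dip in loudness that a plain linear crossfade produces.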

Electronic music legends are back

I’m sure that many of you are just like me: “If I only had [insert the piece of gear of your dreams], my music would be so much better.” The grass is always greener on the other side. When I first started tinkering with electronic music in my teens, a few friends and I had access to a small MIDI studio at a youth centre run by the council. You had to book in to use it. We had an Ensoniq EPS 16+ sampler, a Roland Juno 106 and some crappy Sound Canvas thing. None of us had a portable CD player, and we were too dumb to realise that we could squeeze a fair number of interesting sounds out of the Juno 106 for sampling purposes. What we did instead was record sound samples from my Amiga home computer onto cassette and then sample them, resulting in super lo-fi sounds. We hated it; we were dreaming of clean sounds without noise and the possibility of recording the result onto DAT.

Today it’s the other way around: using only software easily results in something too polished and too clean, in my opinion. The grass is often greener, yes, and striking the right balance is tricky.

I assume this goes for gear as well. “If I only had…” will always apply, and is probably the biggest reason why I haven’t started doing modular synths.

For those of you who, like me, suffer from this from time to time, listening to the latest collaboration between electronic music giants Martin Gore and Vince Clarke is, to me, proof that your gear will not make your music. It’s a shame how it all turned out.

Listen to the first released clip and read more here http://thequietus.com/articles/07500-vcmg-vince-clarke-martin-l-gore-spock

Vince Clarke’s setup is just to die for; I would love to get the chance to use his studio for a while.

Vince has done a terrific short series on YouTube called The Vince Clarke Analogue Monologues, about his gear.

http://www.youtube.com/watch?v=Rwa0hssjboA&feature=related

Martin Klang and Adrian Gierakowski

Thursday 17/11/2011

Martin presented Taquito, an electronic wind instrument. Adrian presented a piece of software which he has been developing since May 2010, and a multitouch iPad controller which was specially designed to facilitate the use of this software in live performance.

Martin and Adrian performed together at the end of the presentation.

Project description

The ag.granular.suite is a modular system for sound design, composition and live performance, built around a granular synthesis engine, which enables its user to explore rhythm, timbre, melody, harmony and texture on a continuous timescale and seamlessly transition between them. Additionally, the multichannel capabilities of the software (grains can be scattered between up to 64 discrete channels) facilitate exploration of the relationships between the above musical elements and physical space. An early version of the software has already been used in live performance during concerts in London and Aldeburgh.

The iPad controller features an innovative user interface, which makes full use of the multitouch capabilities of the device, and facilitates expressive and intuitive control over the software during live performance. Due to the fact that visual feedback is displayed directly on the device, the performer doesn’t have to look at the computer screen at all, and can direct all his attention to the performance.

Adrian Gierakowski Bio

Adrian is a classically trained pianist of Polish origin, a composer of instrumental, electroacoustic and electronic music and a self-taught programmer. He received a degree in Creative Music Technology from the University of Huddersfield with a special award for creative programming. His work has been performed in London (Kings Place), Aldeburgh (Snape Maltings, Britten Studio), Champaign (IL, USA), Huddersfield and York. Currently he is studying for a Masters by research degree at the University of Huddersfield. His research is focused on the use of multitouch-screen interfaces for live performance of electronic music.

Resources

software website with description and download links: http://audibledata.com/Software.html

demo of ag.granular.suite: http://vimeo.com/19108649

multitouch controller: http://vimeo.com/27190010

performance in London: http://vimeo.com/19323406

Tim Murray-Browne and Tiff Chan: Impossible Alone

IMPOSSIBLE ALONE is an interactive installation where music, movement and video-games collide, created in collaboration with Tiff Chan from Central School of Speech and Drama. A vast soundscape awaits discovery, yet can only be accessed through synchronised movement between two people. In this presentation I will talk about the ideas that led up to the installation, how it’s been received and where we’re hoping to take it.

Sam Duffy: Augmented Saxophone

Thursday 03/11/2011

Sam Duffy is a saxophone player with audio engineering experience, currently undertaking research on the Media and Arts Technology PhD programme at Queen Mary University.

She has just finished a project with British Telecom and Aldeburgh Music examining the interactions which take place within instrumental music lessons and how these interactions change when lessons take place via video conferencing. She has been considering the conflict between electronically amplified acoustic instruments and controllers for some time, sparked by an interest in an amplified saxophone called the Varitone, made by Selmer in 1967. The natural progression of these thoughts is to make the Varitone for the new millennium. It is a project which Sam has been mulling over for some time, and the MAT programme has provided insight into ‘the how’ through Arduino and MaxMSP, but the kind gift from a friend of an old soprano saxophone to destroy means that the dream now has to be translated into reality…

Meeting summary

Sam has a background in singing and playing saxophone in bands and small ensembles. An inspiration for her work was discovering the Varitone, an augmented saxophone released by Selmer in 1967 that did not quite match the success of the wah-wah pedal.

Schematics of the Varitone are available online. Beware: there is also a Gibson guitar six-way tone control called a Varitone. To be fair, they got to the name first, in 1959!

Sam looks at user experiences to inform the design of her Augmented Saxophone. She’s interested in making the most of the sound of keys, breath, small movements, as well as the normal pitch sound from the instrument.

She is looking at ways to integrate sensors into a soprano sax to capture as much information as possible. She’s open to collaboration; if interested, you can write to her: sam (at) sduffy.fsworld.co.uk.

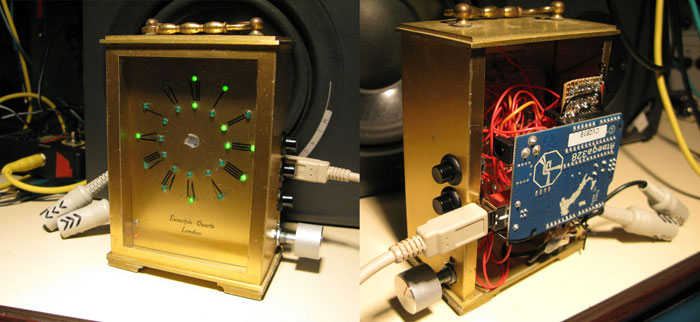

Arduino based Harmonic Clock project

Thursday 27/10/2011, Filip (Zambari)

Filip Tomaszewski, aka Zambari, is an audiovisual artist working in the fields of live video and experimental electronic music, and creating workflows and tools for live and studio performers, including programming and custom hardware. His projects include EQ-AV (AV show + software), AV-BRAIN (hardware), Harmonic Clock (hardware), TC24 (workflow), VJ loops on Archive.org and others.

zambari (at) gmail dot com

http://zambari.info

The Harmonic Clock listens to incoming MIDI note commands and, for the duration of each note, lights up a line starting in the center and angled proportionally to its frequency ratio to the starting note (typically C, but this can be adjusted). That operation translates frequency intervals into angles, so one semitone equals 30°, a fifth (seven semitones) equals 210°, or 150° measured the other way round, a tritone equals 180°, etc. This means that each chord is represented by a unique visual pattern on the face of the analyzer.
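The note-to-angle mapping can be sketched in a few lines of C++. This is an illustration under the assumption of 12 equal steps of 30° each, measured clockwise from the root, and is not the project's actual firmware; `noteAngleDegrees` and `kDegreesPerSemitone` are names invented here.

```cpp
#include <cassert>

// Degrees between adjacent semitones on a 12-position clock face.
constexpr int kDegreesPerSemitone = 360 / 12;  // 30

// Map a MIDI note number to a clockwise angle from the root note.
// Octaves are folded away, so notes 12 semitones apart share an angle.
int noteAngleDegrees(int midiNote, int rootNote) {
    assert(midiNote >= 0 && midiNote <= 127);
    assert(rootNote >= 0 && rootNote <= 127);
    // C++ '%' can return a negative value, so normalise into 0..11.
    const int pitchClass = ((midiNote - rootNote) % 12 + 12) % 12;
    return pitchClass * kDegreesPerSemitone;
}
```

With middle C (MIDI note 60) as the root, a C major triad (notes 60, 64, 67) lands at 0°, 120° and 210°. The fifth at 210° clockwise is the same line as 150° measured counterclockwise, and a tritone always points straight down at 180°.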

As a self-made electronic musician, I didn’t undergo a proper musical education. I know a thing or two, but I would often feel that some insight into the harmonic structure of a song could be useful, and, not having much spare time to spend on learning to play the classics to build up muscle memory of intervals, I decided to try a workaround. The 12-hour clock analogy came from the observation that, even though mainstream music notation divides each octave on the keyboard into seven white notes and five black sharp/flat notes for a reason, the actual maths behind it calls for a simpler representation: after all, the distances between adjacent semitones are equal on a logarithmic scale (or a bit off in some tunings, but still following a logarithmic order). In most Western music a 12-step division of the octave is used, and after each cycle the frequency is doubled. The same note played in two different octaves will always sound good together, with most of the harmonic content overlapping, so in harmonic analysis the octave part can often be discarded.
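The logarithmic reasoning above can be made concrete with a small sketch that maps any frequency ratio onto the clock face, treating one octave (a doubling) as one full turn. The function names are hypothetical and the code only illustrates the maths.

```cpp
#include <cassert>
#include <cmath>

// Angle in degrees (0 <= angle < 360) for a frequency ratio relative to the
// root. One octave (ratio 2) is one full turn, so the octave is discarded.
double ratioAngleDegrees(double ratio) {
    assert(ratio > 0.0);
    const double octaves = std::log2(ratio);
    const double turn = octaves - std::floor(octaves);  // fractional octave
    return 360.0 * turn;
}

// Frequency ratio of n equal-tempered semitones: 2^(n/12).
double equalTemperedRatio(int semitones) {
    return std::pow(2.0, semitones / 12.0);
}
```

An equal-tempered fifth, `equalTemperedRatio(7)`, comes out at 210°, while a just-intonation fifth with ratio 3:2 comes out at roughly 210.6°. That small difference is the "bit off in some tunings" effect: the hand position shifts slightly but stays on the same logarithmic dial.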

I started by writing it as proof-of-concept software in Processing, and when I had the prototype working I realized that it would be great to build a hardware one, as hardware tends to always be around, in view and ready to be turned on, unlike software, which you have to remember to run and which costs you some screen real estate and CPU/memory resources. I went to Brick Lane market the following Sunday and found a suitable metal chassis in the form of an actual clock. I drilled holes for the LEDs in its face and soldered three 8-output serial-in parallel-out chips onto the stripboard to serve as drivers for the LEDs. Other than an optocoupler and a diode for the MIDI-in part, that was the only electrical design I had to do. The rest was just wiring all the LEDs into the drilled holes (I went for two for each hour to make the line metaphor clearer). An Arduino board can be connected and disconnected easily (my stripboard serves as an Arduino shield). Software and design are open source and available at http://eq-av.com/hacl
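Three 8-output shift registers give 24 outputs, which matches two LEDs for each of the 12 hours. The sketch below shows one way the LED pattern could be packed into three bytes. The wiring order (hour h on bits 2h and 2h+1, lowest byte in the first register) is purely an assumption for illustration, and the function names are invented, so the real build may differ.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Assumed wiring (hypothetical): hour h drives LED bits 2*h and 2*h + 1,
// and bits 0-7, 8-15, 16-23 belong to the first, second and third register.
constexpr int kHours = 12;
constexpr int kLedsPerHour = 2;

// Build a 24-bit LED pattern from the set of sounding pitch classes.
std::uint32_t ledMask(const std::array<bool, kHours>& active) {
    std::uint32_t mask = 0;
    for (int hour = 0; hour < kHours; ++hour) {
        if (active[hour]) {
            // Two adjacent bits light both LEDs of this hour's line.
            mask |= std::uint32_t{0x3} << (hour * kLedsPerHour);
        }
    }
    return mask;
}

// Split the pattern into the three bytes to clock out to the chain.
std::array<std::uint8_t, 3> registerBytes(std::uint32_t mask) {
    assert((mask >> 24) == 0);  // only 24 LEDs exist
    return {static_cast<std::uint8_t>(mask & 0xFF),
            static_cast<std::uint8_t>((mask >> 8) & 0xFF),
            static_cast<std::uint8_t>((mask >> 16) & 0xFF)};
}
```

On an Arduino, each of the three bytes could then be clocked out with the built-in `shiftOut()` function before pulsing the latch line. Which byte has to go first depends on how the registers are chained.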

The Harmonic Clock can be used in real time while playing a MIDI keyboard, as a visual feedback mechanism, or for reviewing recorded MIDI events. A channel selection button on the side allows an individual MIDI channel to be selected.
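Channel selection comes down to looking at the low four bits of the MIDI status byte. The following sketch, with hypothetical names and a deliberately simplified state model, shows how note-on and note-off messages on the selected channel might update the set of lit hours. It assumes complete 3-byte messages and ignores running status.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Tracks how many keys are currently held for each of the 12 pitch classes.
struct ClockState {
    std::array<int, 12> held{};  // note-on count per pitch class
    std::uint8_t channel = 0;    // selected MIDI channel, 0-15
    int rootNote = 60;           // middle C as the "12 o'clock" note
};

// Feed one complete 3-byte channel voice message into the state.
// Returns true if the message changed the display.
bool handleMidi(ClockState& s, std::uint8_t status, std::uint8_t note,
                std::uint8_t velocity) {
    assert(note < 128 && velocity < 128);     // data bytes are 7-bit
    const std::uint8_t kind = status & 0xF0;  // message type (high nibble)
    const std::uint8_t chan = status & 0x0F;  // channel (low nibble)
    if (chan != s.channel) return false;      // not the selected channel
    const int pc = ((note - s.rootNote) % 12 + 12) % 12;
    // A note-on with velocity 0 is treated as a note-off, per MIDI convention.
    const bool noteOn = (kind == 0x90 && velocity > 0);
    const bool noteOff = (kind == 0x80) || (kind == 0x90 && velocity == 0);
    if (noteOn) { ++s.held[pc]; return true; }
    if (noteOff && s.held[pc] > 0) { --s.held[pc]; return true; }
    return false;
}
```

Counting held notes per pitch class, rather than storing a single on/off flag, keeps a line lit when the same note is held in two octaves and only one of them is released.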

Chris Jack: hydrophone recordings

On 20/10/2011, we had our first meeting, where Chris Jack presented the results of a workshop on hydrophone recordings. Chris also demonstrated his project on brainwave music control (EEGs + Pure Data).